Don’t Spend Effort on Personalization if You Aren’t Going to Measure It

Personalization gets talked about a lot. Users expect a tailored, hyper-relevant experience that fulfills their needs quickly.

That may seem like a great goal, but you should not assume that more personalized means better. Personalization should still be treated as experience optimization, which means always measuring your personalization ideas so that your personalization decisions are data-driven.

At Optimizely, we have a lot of customers who use both A/B testing and personalization. We also have a lot of customers who ask us: “Where do I start now that I have both? Which will give me the best return on solving our customer problems? How do I know which is best?” To answer these questions, let me start by defining my view, as Optimizely’s Lead Strategy Consultant, on what “experimentation” means.

An experiment is strictly defined as a procedure carried out under controlled conditions in order to discover an unknown effect. Though this has a unique application for your products and experiences, I find that this scientific definition holds pretty true. So, when we talk about an experiment run using Optimizely’s digital experience platform, it really is an experiment no matter what capability you are using (notice how each is focused on delivering the best experience for our users):

- A/B test or Feature Test – understand the best average experience for all (or large segments) of users

- MVT – understand which variables delivered the best experience for all (or large segments) of users

- Personalization – understand what the best unique experiences are based on specific criteria of a segment of users

- Rollout – understand the impact of a new feature or experience with lower risk

As you start to run personalization campaigns, you should approach them as you would an experiment. Frame your idea as a hypothesis and set clear success criteria against the metrics that are important. And yes, that means it all starts with a problem statement.

Here are a few examples of problem statements to make it a bit more real:

- Retail – Our past purchasers consistently order in the same price range and have a lower product view rate when we show products not related to their past purchases.

- B2B – Our ad campaign traffic has a 10% higher bounce rate than our other primary channels and converts to a lead in only 2% of visits.

- Travel – Our loyalty members have told us in voice-of-customer surveys that they expect us to remember their most used origins in their searches.

Now let’s turn these into hypotheses related to personalization:

- Retail – If we display a homepage banner with a CTA that directs users to the price category page matching the total value of their past purchases, then we will increase product view rate and, in turn, purchases.

- B2B – If the landing pages where our ad campaign traffic arrives mirror the value props, offers, and CTAs shown in the specific ad, then we will decrease bounce rate and, in turn, increase the lead generation rate.

- Travel – If we pre-populate the homepage search ‘Origin’ field for loyalty members based on their most recent searches, then we will increase search rate and, in turn, bookings.

Looking at the retail problem statement, this could be built as an Optimizely personalization campaign by defining audiences that tie to the experiences you want to deliver, each with unique audience conditions that qualify a user for a specific related experience. As an example, I might qualify for the ‘High Value Shopper’ audience if I have spent $200 in the last month. While the audiences and experiences are different, each is measured against the same metrics that you define for the overall campaign.
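To make the idea of audience conditions a bit more concrete, here is a minimal sketch in Python. It is not how Optimizely defines audiences; the `Purchase` type, the `qualify_audience` function, and the rules for the ‘Affordable Shopper’ and ‘New Visitors’ audiences are assumptions made up for illustration. Only the ‘High Value Shopper’ rule ($200 in the last month) comes from the example above.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Purchase:
    """Hypothetical purchase record used only for this illustration."""
    amount: float
    purchased_on: date


def qualify_audience(purchases, today, threshold=200.0, window_days=30):
    """Return the audience name a shopper would qualify for.

    Assumed rules: no purchase history means 'New Visitors'; spending at
    least `threshold` within the last `window_days` days means
    'High Value Shopper'; everyone else is an 'Affordable Shopper'.
    """
    if not purchases:
        return "New Visitors"
    cutoff = today - timedelta(days=window_days)
    recent_spend = sum(p.amount for p in purchases if p.purchased_on >= cutoff)
    if recent_spend >= threshold:
        return "High Value Shopper"
    return "Affordable Shopper"
```

Whatever the real conditions look like, the point is that every visitor lands in exactly one audience, and every audience is then judged against the same campaign metrics.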

Turning our problem statements into hypotheses will give us an indication of whether personalization is even the right avenue to solve the problems at hand. When doing personalization in Optimizely, you still have Stats Engine to provide statistical rigor and a results page to interpret and share with stakeholders. You can still confidently walk into a meeting with your stakeholders and tell them “yes, this works, let’s iterate” or “I don’t think personalization helps us here, what other ideas do you have?” Who knows, maybe an A/B test would better serve this problem!

How do you know what is a good candidate for an A/B test versus a personalization campaign when you look across all of the problem statements you want to solve through experimentation? These are the triggers I look for when I think we may have a personalization campaign on hand:

- Our problem statements are focused on the same part of the experience, but the supporting data for each is different based on particular audience segments

- Our solutions for the problem statement are different based on particular audience segments

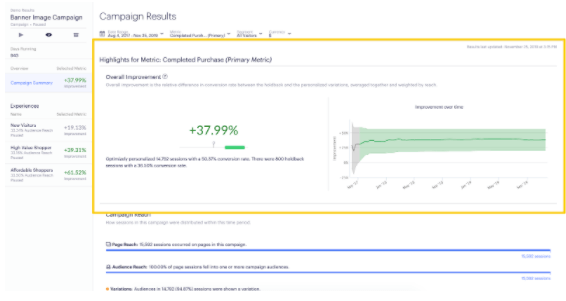

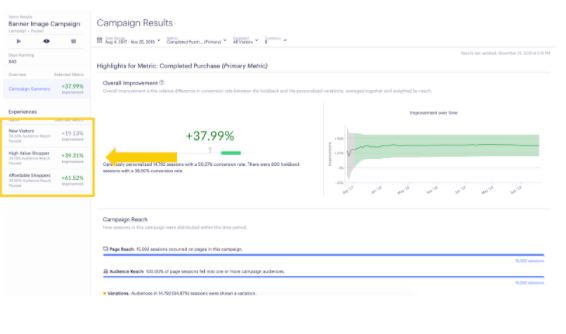

Using our retail example, let’s look at what results could look like. We’ll be using Optimizely’s personalization results page, as it lets you vet both a top-line hypothesis and audience-specific hypotheses, which can give you clarity on expanding the original hypothesis to other audiences or parts of your business. These results can also tell you when the original hypothesis (or maybe personalization at all) is not the right experience: the summary results page shows performance on your primary metric across all the audiences in your campaign.

You can see highlighted in yellow above that, when we measure against all visitors (regardless of audience) in this Optimizely personalization campaign, we had a statistically significant improvement of 38% on our purchase conversion revenue compared with the holdback. That’s great! By including measurement in our personalization campaign, we know that we improved the experience for a large set of users and that this personalization strategy is worth testing with more audiences or in other parts of our business.
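If the idea of a holdback is new, the sketch below shows one common way such a group can be formed and how a relative improvement is calculated against it. This is an assumption-laden illustration, not Optimizely’s actual bucketing logic: the function names, the 5% default, and the hashing scheme are all hypothetical.

```python
import hashlib


def in_holdback(visitor_id: str, campaign_key: str, holdback_pct: float = 5.0) -> bool:
    """Deterministically place roughly `holdback_pct` percent of visitors in the holdback.

    Hashing the campaign key together with the visitor ID means the same
    visitor always gets the same answer for a given campaign.
    """
    digest = hashlib.sha256(f"{campaign_key}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in the range 0..9999
    return bucket < holdback_pct * 100


def relative_lift(personalized_value: float, holdback_value: float) -> float:
    """Relative improvement of the personalized group over the holdback."""
    if holdback_value == 0:
        raise ValueError("holdback value must be non-zero to compute a relative lift")
    return (personalized_value - holdback_value) / holdback_value
```

Visitors in the holdback see the default experience, so any difference in the metric between the two groups can be attributed to the personalized experiences rather than to who happened to visit.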

If we continue to dig into the data and look at the individual audiences, we see that New Visitors was not a statistically significant win on purchase conversion, while both High Value Shoppers and Affordable Shoppers were. New Visitors wasn’t a loser, but maybe serving them a banner upon entry is not how we solve this problem for them. By being data-driven, we can instead go back to our solutions and try something new.
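For readers who want to see what “statistically significant for one audience but not another” can look like in numbers, here is a rough sketch. It uses a classic fixed-horizon two-proportion z-test as a stand-in; it is not the method Stats Engine uses, and every count in `results` is invented for illustration rather than taken from the campaign above.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for a difference in conversion rates (pooled z-test)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (conv_a / n_a - conv_b / n_b) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical counts per audience:
# (personalized conversions, personalized visitors,
#  holdback conversions, holdback visitors)
results = {
    "High Value Shoppers": (180, 2000, 120, 2000),
    "Affordable Shoppers": (260, 4000, 200, 4000),
    "New Visitors": (105, 3000, 100, 3000),
}


def significant_audiences(results, alpha=0.05):
    """Map each audience name to whether its lift clears the significance threshold."""
    return {
        name: two_proportion_p_value(*counts) < alpha
        for name, counts in results.items()
    }
```

With these made-up counts, the two shopper audiences clear the 0.05 threshold and New Visitors does not, mirroring the pattern described above. The more audiences you slice by, the more likely one looks like a winner by chance, which is one reason to lean on the platform’s own statistics rather than a hand-rolled test like this one.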

If you are looking to start leveraging both testing and personalization, I’d encourage you to maintain the ideas for both in the same backlog. Prioritize them against each other and view them all as hypotheses. Personalization may have other steps (e.g. more time in the asset creation process), but both should be viewed as ways to confidently solve customer problems. Consider opening your next hypothesis workshop to both testing and personalization.

As you start out with personalization and look to answer “where do I start?”, start from the same spot you always have: create your problem statements and measure as you already do with your testing. Without measurement on your personalization campaigns, you are chasing an ideal and not necessarily solving your customer problems. Learn when personalization works and iterate on why it did to develop your company’s personalization strategy. Your customers are all different, and personalization isn’t guaranteed to be the best solution!