3 Ways to Increase Retention with Experimentation

At BiggerPockets, we have been aggressively A/B testing our signup funnels in Optimizely for a little over a year and have seen success, improving our free signup funnels by over 80%.

Once we had our acquisition running well, we decided to focus our experimentation efforts on how to retain these new signups to maximize the benefits of this influx of new users.

BiggerPockets is an online resource for real estate investors, with educational content and tools designed to help people seeking financial freedom through real estate investing. I run our conversion-rate optimization efforts, focusing on the KPIs and conversion funnels that are most important to the business.

When we started focusing on retention, we quickly learned that testing how to retain users is an entirely different beast than testing signup and conversion funnels. So, to give you a head start if you’re looking to tackle retention, here are the biggest testing obstacles we ran into and how we overcame them.

To start, what makes impacting and measuring retention via experimentation difficult?

Problem: Lag between ending experiment & seeing retention impact

One of the trickiest things about retention tests is the lag between ending the experiment and receiving all the data you need to make a decision. In most A/B tests, the starting event and the final conversion event occur within the same session. In retention tests, the conversion events you are looking to measure will likely occur weeks or even months after the initial starting event. There will be a lot of additional touchpoints between the experiment triggering and the conversion event related to retention. This makes it very hard to test rapidly and have a clear control experiment.

Solution: Identify user behaviors that correlate to retention downstream and move those metrics

Given how far down the funnel retention sits at most companies, it helps to shift away from retention as your key metric (which has many inputs outside of the experiment) and instead identify which behaviors that experimentation can move lead to higher retention. This allows you to run smaller tests that target the user events correlated with the user being retained down funnel. If you need help thinking about how to approach this, a goal tree is a great place to start.

BiggerPockets Forums

For example, at BiggerPockets, one of our key product features is our real estate forums. Based on retention analysis we’ve done in Amplitude (our product analytics tool), we know that people who post on the forums are much more likely to become active users on our site. Rather than testing “does prompting users to post on our forums increase 4-week retention?”, we can simplify this by testing the best way to get users to post in the forums during their first week after signing up.

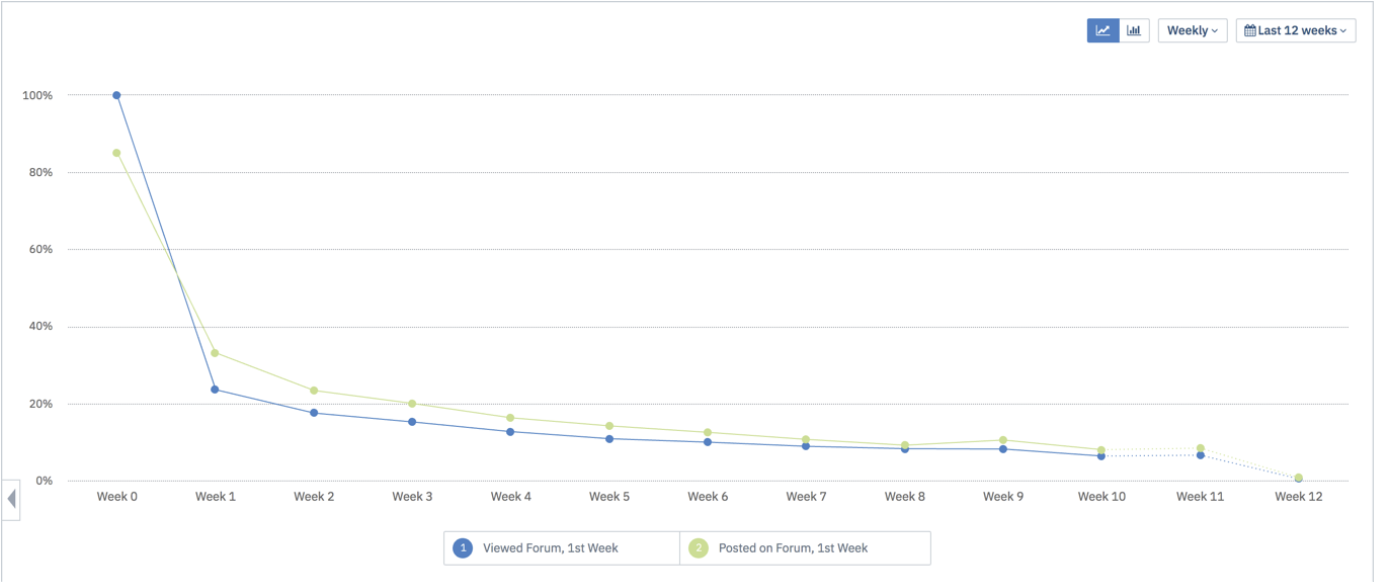

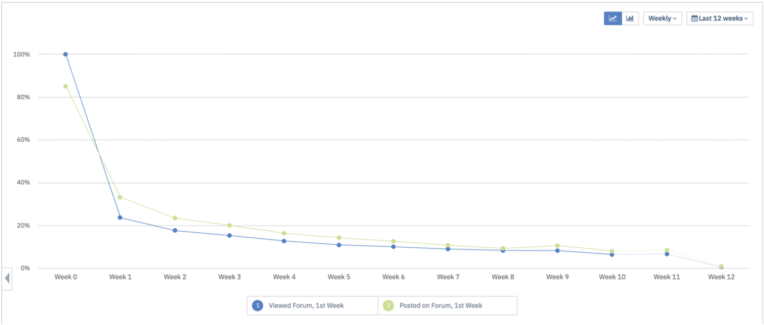

So, we can hypothesize that if we get more users to post in the forums, they are more likely to come back. Our retention data only shows that forum posting is correlated with higher retention, not that it necessarily causes higher retention. In order to verify this, we can run the experiment and then do retention analysis in Amplitude on the test cohort in a month or so to confirm that those exposed to the experiment variations were retained at a higher rate than the control.

This is what a retention analysis looks like in Amplitude. The cohort of users that posted in the forums in their first week (green line) has higher retention.
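To make the cohort comparison concrete, here is a minimal Python sketch of the calculation behind a chart like this one. Amplitude does this for you, so the code is only an illustration. The record layout (a `posted_in_first_week` flag paired with an `active_in_week_4` flag) and the sample data are assumptions made up for the example, not real BiggerPockets data.

```python
from collections import defaultdict

def retention_by_cohort(users):
    """Return the share of retained users in each cohort.

    `users` is an iterable of (posted_in_first_week, active_in_week_4)
    boolean pairs. Both field names are hypothetical.
    """
    totals = defaultdict(int)
    retained = defaultdict(int)
    for posted, active in users:
        cohort = "posted" if posted else "did_not_post"
        totals[cohort] += 1
        retained[cohort] += int(active)
    return {cohort: retained[cohort] / totals[cohort] for cohort in totals}

# Made-up sample: 3 users who posted, 4 who did not.
sample_users = [
    (True, True), (True, True), (True, False),
    (False, True), (False, False), (False, False), (False, False),
]
rates = retention_by_cohort(sample_users)
print(rates)
```

A gap between the two rates only shows that posting and retention move together. It takes an experiment with a control group to show that prompting users to post is what changes retention.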

Problem: Many inputs impact retention

Typically in A/B testing, we measure conversion from a starting event to an ending “conversion event.” For example, on a checkout page test you might measure conversion from loading the checkout page (starting event) to submitting an order (conversion event). With retention, there isn’t a clearly defined conversion event on the same page.

For example, if you want to test the retention of users who just signed up for your website, you don’t necessarily care if they came back the next day, two days later, three days later and so on. What you likely care about is whether or not they came back repeatedly and steadily over time. You might aggregate this into a single metric such as “user came back 3+ times in the first month.” However, figuring out how often a user needs to come back to your site to be retained can be tricky. To gain a deeper understanding of how to pick a retention metric that’s meaningful for your product, I recommend checking out this blog article.
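As an illustration, a metric like “user came back 3+ times in the first month” could be computed from visit dates roughly as follows. This is a sketch under assumptions: the function name, the choice to count distinct return days after the signup day, and the 30-day window are all hypothetical and should be adapted to whatever definition fits your product.

```python
from datetime import date, timedelta

def is_retained(signup, visits, min_return_days=3, window_days=30):
    """True if the user returned on at least `min_return_days` distinct
    days within `window_days` after signing up.

    The signup day itself is not counted as a return visit (an assumption).
    """
    window_end = signup + timedelta(days=window_days)
    return_days = {day for day in visits if signup < day <= window_end}
    return len(return_days) >= min_return_days

signup = date(2024, 1, 1)
visits = [
    date(2024, 1, 1),   # signup day, not counted
    date(2024, 1, 3),
    date(2024, 1, 3),   # second visit on the same day, counted once
    date(2024, 1, 10),
    date(2024, 1, 25),
]
print(is_retained(signup, visits))  # True: returned on Jan 3, 10 and 25
```

Collapsing retention into a yes/no value per user like this is what makes it usable as a conversion event in an A/B test.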

Solution: Focus each test on a single stage of retention

Remember this formula: Retention = (activation * engagement * resurrection) where:

- Activation — User gets started with your product

- Engagement — User repeatedly engages with the core features of your product

- Resurrection — Users come back to your product after not using it for a period of time

I highly recommend limiting tests to a single stage of retention (activation, engagement, or resurrection). The smaller the time gap between the user performing the starting event and the conversion event, the faster you will get learnings on your test and the less these conversions will be clouded by external factors. For these types of tests, it helps to remember that retention is the output, not the goal. The goal is for users to have a valuable experience with your product.

You can see this when testing your user onboarding flow. Rather than testing whether a change in onboarding leads to a retained user, test whether a change to onboarding helps a user figure out how to get started using your product in the first 7 days. Once users have started using the product, you can test ways to drive return visits. Which leads me to my next point...

Solution: Optimize to your product’s natural frequency

“Natural frequency” refers to how often your customer naturally encounters the problem that your product solves. For example, one problem BiggerPockets’ customers face is that they have a specific landlording question they want to pose to other landlords who have encountered the same situation. We expect most landlords will encounter this problem about once per month, making their natural frequency for forum posting about monthly.

Trying to optimize to an unnatural frequency generally results in you spinning yourself in circles while spamming your users with notifications to entice them to return to your site. It doesn’t help your users find value in your product and hurts your relationship with them long-term.

For example, if BiggerPockets were to optimize for daily active forum posters, we would be testing the wrong frequency. Most of our customers own 0-5 rental properties, and those real estate investors should not have daily questions popping up. If they are posting questions on our forums daily, it seems likely that they are actually not getting their problem solved. Instead, I would imagine they are having to repost their question quite often in order to get an answer.

Identifying your product’s natural frequency helps you determine how often customers should come back to your website (for more on determining your product’s natural frequency, check out this article). From a testing perspective, testing to the right natural frequency helps you identify a single KPI that you can treat as the conversion event (e.g. did the user come back at least once in the first 7 days), making it simpler for you to measure the statistical significance of your tests.
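With a single yes/no conversion event per user, checking significance can be as simple as a two-proportion z-test. The Python sketch below is one common way to do that, not how Optimizely computes its results, and the counts are made up for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a / n_a: converted users and total users in the control.
    conv_b / n_b: converted users and total users in the variation.
    Returns (z, p_value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: users who came back at least once in their first 7 days.
z, p_value = two_proportion_z_test(conv_a=400, n_a=2000, conv_b=460, n_b=2000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

The shorter the window behind the conversion event, the sooner every user in the test has a final yes or no, which is what keeps this kind of check fast.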

Problem: User behavior differs widely

We have found that understanding user behavior is more complex for retention. While all of your customers might use the checkout page in a similar manner, the content or feature that motivates them to return to your website can work well for one customer type and be a disaster for another. This means your test analysis has to be detailed and nuanced.

Solution: Have a clear hypothesis, no matter what

As retention testing gets more complex, it can be tempting to make the hypothesis a box that gets filled in retroactively after a test is launched. I can’t stress enough how important it is to ALWAYS clearly state your hypothesis before an experiment.

The danger of not being clear on your hypothesis before launching the experiment is that you reach the end of the test and realize you have nothing to learn other than “experience X was worse for customer Y than experience Z”, without really understanding why you thought users would react differently (or which users you thought would act differently).

This is especially important if you are working with a team, where people don’t always speak up if they don’t understand what’s being tested.

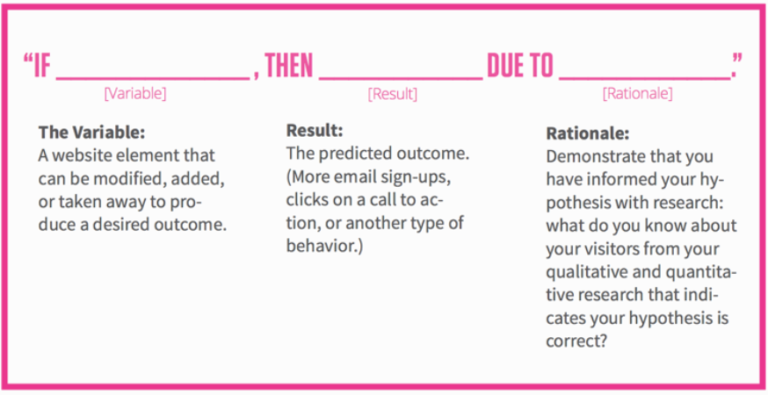

To build a strong hypothesis, I recommend following Optimizely’s “If ____, then ____, because ____.” hypothesis framework.

I’ll see you again in a few weeks when I’ll be sharing best practices for experimentation on global navigations!

If you are interested in continuing the conversation, find me on LinkedIn or reach out to me directly at alex@biggerpockets.com. And if you’re ready to get started with experimentation, reach out to us today.